This problem set will teach you some useful and common strategies for handling problems common in networking, including loss, delay, and low utilization. It also teaches you programming using threads and requires synchronization. The setting is a game called network pong.

- Classwide extension to Sat 12/12 11:59pm AoE (extension 1 day later)

Get the code

Start with the cs61-psets Git repository you used for Problem Set

5 and run git pull handout main to

merge our code, which is in the pset6 subdirectory, with

your previous work. If you have any “conflicts” from Problem Set 5, resolve

them before continuing further. Run git push to save

your work back to GitHub.

You may also create a new cs61-psets repository for this assignment. Don’t

forget to enter your repository URL on the grading server.

Once you have merged, edit the pset6/serverinfo.h file so that

PONG_USER is defined to a unique string that only you know.

Note. We currently do not plan to implement authentication on the pong server. This means that, if you learn another person’s string, you can mess with their heads. Please do not abuse this system. Aggressive abuse will be reported to The Authorities.

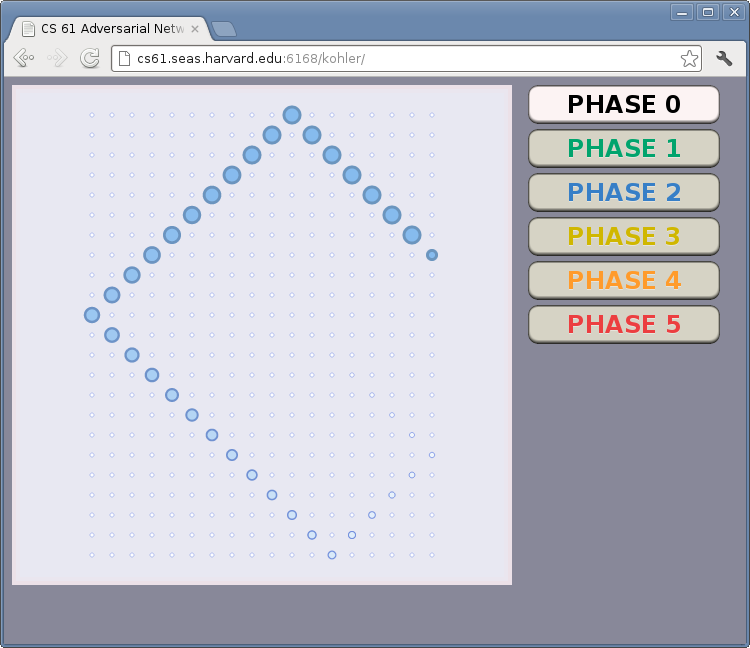

Type make, and then run the ./pong61 program.

It will print out a URL. Visit that URL in your browser. You should see a

bouncing ball in a rectangular field:

pong61 works by sending HTTP messages to a Web server we run. HTTP is the

application protocol on which the Web is built. Read more about HTTP before

you continue. When it starts up, pong61 makes a single request

to a URL like this:

http://cs61.seas.harvard.edu:6168/PONG_USER/resetThis tells the server to reset the pong board’s state. The server clears the board and returns a simple response containing the board’s width and height:

18 23Then pong61 makes many requests to URLs like this:

http://cs61.seas.harvard.edu:6168/PONG_USER/move?x=XPOS&y=YPOS&style=onThis request causes a new ball to appear at position (XPOS,YPOS).

The server responds with a numeric code and explanation. If everything

goes well, it says:

0 OKIf there’s a problem, the numeric code is negative, as in:

-1 x and y parameters must be numbersAfter each request, pong61 waits 0.1 seconds before making the next

request.

Our handout code runs each HTTP request in its own thread. The main thread spins to wait for each thread to complete before going on to the next. This works just fine on Phase 0. To do the problem set, you must change the code so it works on the other phases too, while introducing synchronization to keep things safe. Use the web page’s phase buttons to change phases.

Latency note. The

pong61server may report spurious errors if your network is on bad Internet or far away from thecs61.seas.harvard.eduserver. Check your distance by runningping cs61.seas.harvard.edu. Eddie’s home Internet reports latencies in the tens of milliseconds (e.g.,time=26.191 ms):kohler@Peachy ~ % ping cs61.seas.harvard.edu PING cs61.seas.harvard.edu (34.193.34.22): 56 data bytes 64 bytes from 34.193.34.22: icmp_seq=0 ttl=233 time=26.191 ms 64 bytes from 34.193.34.22: icmp_seq=1 ttl=233 time=26.421 ms 64 bytes from 34.193.34.22: icmp_seq=2 ttl=233 time=23.061 msIf you frequently see loss or latencies greater than 100–200ms, spurious errors are likely. To work around this:

- Update your code using

git pull handout main, then run./pong61with a-l LATENCYargument. This asks the server to treat your client more leniently. For instance, ifpingreports a 500ms latency, try./pong61 -l 500.- Use the grading server’s “Check” buttons to check for errors without worrying about latency.

Phase 1: Loss

In Phase 1, the server starts to lose messages. It will occasionally

go offline for a short period. During that time, every move request is

rejected by closing down the connection. The

http_connection::receive_response_headers() function sets conn->cstate to

cstate_broken and conn->status_code to -1 when this happens, but

the pong thread ignores this problem and continues as if everything was

fine. That position in the pong trail never gets filled in. The server

shows this mistake by drawing black marks in the spaces.

Your job in this phase is to detect lost messages and retry. When the server drops a connection, your code should close that connection (to free its resources) and make a new connection attempt at the same position. It shouldn’t move to the next position until the server responds for the current position.

However, you must be careful not to bombard the server while it is offline. The server will notice this and explode. Instead, you must implement a form of exponential backoff. This is a simple, powerful concept.

- The first time a connection attempt fails, wait for K seconds before trying again. A good initial value for K is 0.01 sec; it shouldn’t be too long—1 sec is way too large.

- If the retry also fails, wait 2K seconds before trying again.

- If that retry also fails, wait 4K seconds before trying again.

- In general, after N failed retries, wait 2NK seconds before trying again. (You may want to introduce a maximum backoff; perhaps you would wait min(2NK, 128) seconds before trying again.)

Exponential backoff is awesome because it responds to short outages quickly, but imposes only logarithmic overhead (i.e., the number of messages sent during the outage is logarithmic in the length of the outage). It’s ubiquitous: Ethernet is built on it, and the next time your Gmail goes offline, check out the numbers that appear after “Not connected. Trying again in...”.

Hint. Implement one phase at a time, always thinking how you could accomplish the task in the simplest correct way. Avoid overengineering! Our solution set implements all phases, without race conditions, in less than 60 lines of code.

Phase 2: Delay

In Phase 2, the server delays its responses. It will send you the full header for its response, but delay the body. Since the handout loop waits for the body before sending the next request, the pong ball will move extremely slowly in Phase 2. Too slowly, in fact: the server enforces a minimum ball speed, and when your code is slower than that speed, you’ll see some black marks on the display.

You might think solving this problem would be easy: just close the connection before the response arrives. But the server is too clever for this. If you close a connection prematurely, it explodes.

To support delay, your pong61 must handle multiple concurrent

connections to the server. Now, the main thread may need to spawn a new

thread before the response arrives! But watch out. If you leak

connections, the server will explode.

Your Phase 2 code must also work in Phase 1. We suggest you make Phase 2 work first on its own, then go back and make Phase 1 work again.

Phase 3: Utilization and Synchronization

So far, your pong61 client opens a new network connection for every

ball. This is wasteful and slow and in Phase 3 the server will not allow

it. You should instead reuse valid HTTP connections for new ball

positions.

An http_connection object is available for reuse if and only if

conn->cstate == cstate_idle. This means that the server sent a

complete response and is waiting for another request.

Reusing connections would be really easy—except that in Phase 3 the

server also drops some connections (as in Phase 1) and delays some

connections (as in Phase 2). Your pong61 client must handle it all,

and you must use synchronization primitives correctly.

The key function you’ll need to add is a connection table of available

connections. This can be a linked list, an array, a std::list or

std::deque, or whatever you’d like. When a connection reaches state

cstate_idle, add it to the table. When you need to contact the server

(either for the first time in a connection thread, or after some exponential

backoff), check the table first, and use that connection if one exists.

Make sure that you protect your connection table from concurrent access!

There should be no race condition bugs. Use synchronization objects to

handle this, and make SAN=1 to check your work. You should simultaneously

remove the unsafe global move_done variable and replace it with a

synchronization object.

Note. Please see the lecture notes and section notes for more on synchronization objects. Do not use

std::condition_variablefor this pset; usestd::condition_variable_anyinstead.

Phase 4: Congestion

In Phase 4, the server sometimes behaves as if it is congested and asks the

client to cool down for a while. A congested server responds to a request not

with 0 OK, but with a positive number indicating the number of

milliseconds the client should pause. For instance, this response:

+1948 STOPmeans the client must pause for 1948 milliseconds, and not send any RPCs

during that time. The display will show a stop sign during this pause, and if

pong61 ignores the pause and sends the server another RPC, the server will

explode. But once the pause ends, the client should go right back to sending

requests.

Delay, as in Phase 2, can apply to congestion responses too. Your client

threads should pause once the whole response is available (i.e., after

http_connection::receive_response_body returns).

The thread that detects congestion should use a synchronization object to block RPCs from other threads.

Phases 1 through 3 are still active in Phase 4. Phase 4 may catch some race conditions in your code from Phase 3.

Blocking note. In most cases, a thread should unlock all locks before blocking, since this allows other threads to run. For instance, if your main thread holds a lock or mutex, it should unlock that lock or mutex before calling

usleep(delay). However, it is OK to hold a lock while blocking when the explicit intent is to stop other threads from running, as in cool-down periods.

Race condition note.

STOPhandling involves some inherent race conditions because the network can delay messages, but you should minimize race conditions involvingSTOPin your own code. Once a thread detects a congestion response, that thread should very quickly block other threads for the indicated number of milliseconds. One common error involves a synchronization plan that delays the start of the congestion period. For instance, if your server is exploding due to new requests that are received approximately 100 milliseconds (0.100 sec) into the STOP period, you should make sure that no thread has blocked for 100 milliseconds while unnecessarily holding a shared mutex.

Phase 5: Evil

Phase 5 is a mystery, but run it and you’ll figure out the problem soon enough.

Phase 6: Proxy

In Phase 6, you will contact the server using a proxy, which is a relay between your client and the server. Your job is to pick the correct proxy.

A proxy is an intermediary between a client and a server. The client sends requests to the proxy, which examines and possibly modifies those requests before forwarding them to a server. The server’s response goes to the proxy, which forwards it back to the client. Proxies are used to improve performance, privacy, and security for client-server communication. For instance, a proxy might prevent a browser from visiting certain websites, or might forward connections to the closest or fastest server containing the desired data.

We have provided proxy code for you in proxypong61.cc. Running

./proxypong61 will start PROXY_COUNT different proxies, listening on ports

PROXY_START_PORT through PROXY_START_PORT + PROXY_COUNT - 1 (or 6161

through 6164). One of these proxies responds to requests much faster than the

other ones—that’s the one you want.

Your job is to support the -x proxy option in pong61. In our handout code,

pong61 -x always connects to the first proxy. Instead, your code should:

- Connect concurrently (i.e., in parallel) to all

PROXY_COUNTproxies. (Uselookup_tcp_server(pong_host, pong_port + PROXYNUM)to look up the server address for proxyPROXYNUM.) - Send a

queryrequest, such asquery?x=0&y=0, to each proxy, receive response header and body, and measure the latency of the entire response. - Record the

addrinfoand port number of the fastest proxy and use that proxy for all future connections (including theresetrequest).

The server will explode in two cases: (1) if your proxy measurement takes too long (not done concurrently); (2) if you use an incorrect proxy any time after the measurement phase.

Phases 1 through 5 should still run correctly with the proxy option.

Hints and advice

For full credit, your code must not suffer from race condition bugs. You’ll

need to think this through, as race conditions may not show up

during normal testing. ./pong61 should run cleanly with thread sanitizers.

The ./pong61 -f flag, which runs pong61 faster than normal, might be

useful as you look for race conditions.

We are only concerned with race conditions inside your client (i.e., between different client threads). We are not concerned with rare issues with scheduling between the server and the client, such as network reordering. It is impossible to avoid all client–server race conditions in this pset. But as usual, your code should never execute undefined behavior.

Extra credit

Dynamic proxy selection. In -x mode, your code could periodically check

every proxy’s latency in case the fastest proxy has changed.

Game. Implement something fun. For example, two teams could get together and implement Space Invaders (one team programming the monsters, and one team programming the spaceship)! Here are some RPCs the server implements that might be useful.

- Your fun mode should run when

pong61is given the-nflag (inmain, this is indicated bynocheck == 1). This flag turns off the server’s checking facilities. Without the-nflag, your client should run in normal mode. - You can query the state of a cell with a

query?x=XPOS&y=YPOSRPC. - You can set a cell to a new state for a given duration with a

move?x=XPOS&y=YPOS&style=STYLE&duration=MILLISECONDSRPC. This only works innocheckmode. You can also append&fade=MILLISECONDSto the RPC to control how quickly the cell fades out (the fadeout defaults to 4 seconds). STYLEhas a number of interesting possibilities. See if you can figure out what they are!- You can query multiple cells with a

query?coords=STRRPC, and set multiple cells’ states with amove?coords=STRRPC.STRshould consist of X,Y coordinate pairs separated by spaces or commas. - There is also a

cmpxchg?x=XPOS&y=YPOS&expected=STYLE&style=STYLE...RPC.