Overview

In this lecture, we discuss the primary system calls shells use to arrange inter-process communication.

Full lecture notes — Textbook readings

API design

- Many computer systems offer their users an API (application programming interface)

- C++ offers its programmers a standard library

- Kernels offer processes system calls

- An important programming skill is learning to use an API to accomplish a task

- Solve a puzzle with the pieces you’re given

- Another important programming skill is learning how to make an effective API

- Simple to use

- Powerful (can accomplish many tasks)

- Fast (or can be used in an efficient way)

- Not too hard to maintain

- Connecting these skills: Why did an API’s designers choose that API?

- Why these system calls?

- Sometimes a satisfying answer!

- Open new ways of thinking

Primary process control system calls

- Create a process:

fork - Run a program:

execfamily - Exit a process:

_exit - Stop a process:

kill - Await completion:

waitpid

Inter-process communication (IPC)

- Processes are isolated

- But wholly isolated processes could not cooperate

- We have at least one IPC channel:

_exit! How fast is it? - Need high-bandwidth channel

Primary IPC system calls for shells

- Create a connected pair of file descriptors:

pipe - Rearrange file descriptors:

dup2

The pipe system call

pipe(int pfd[2])system call- Creates a pipe, which is a high-throughput communication stream

- The pipe is assocated with two descriptors,

pfd[0]andpfd[1] - Data written to

pfd[1](the “write end”) may be read frompfd[0](the “read end”) - Returns 0 on success, -1 on failure

pipe illustration

selfpipe

Pipe privacy

- We sometimes want isolated communication among specific processes

- Process P sends messages to process Q

- Other processes cannot interrupt this communication

- Consider file system files

- Can be read or written by name

- Any process can modify a file by name

- Pipes are unnamed: a process can only access pipe ends listed in its file descriptor table

- Generally requires that a parent process create the pipe, then pass to child processes through cloning the environment

- (Although file descriptors can be sent in messages too)

Pipe throughput

- Why are pipes high-throughput?

- The in-kernel buffer supports batching

- Bytes written to a pipe are buffered in the kernel until read

- How many bytes?

Pipe end-of-file

- A read from a pipe returns end-of-file when all file descriptors for the write end are closed

- A write to a pipe terminates the writer when all file descriptors for the read end are closed (more later)

- Pipe hygiene: Clean up pipe ends when no longer needed

childpipe and dangling ends

Pipes and the shell

- Have a shell process,

sh - Want to set up a pipeline,

/bin/echo 61 | wc -c

Problem: How to move file descriptors around?

dup2

dup2(oldfd, newfd): Makenewfdpoint to the same file description asoldfd- If

oldfdis not open, returns -1 - If

newfd == oldfd, does nothing; else closesnewfdfirst

- If

Pipe dance

- Reach this goal using the system calls you know!

Pipe dance explained

-

Here’s the initial state of the shell.

-

Child processes inherit file descriptors from their parents only at

forktime. Since pipes must be accessible to children in the pipeline, the pipe must be created first, before the child processes. -

Now the pipe exists, we can create the left-hand child. It is still running shell code.

-

We switch our attention to the left-hand child. Inside the code for the child (

if (result_of_fork == 0) { ... }), we must reconfigure the process environment to match the requirements of the pipeline. We start by setting up the standard output file descriptor withdup2(pfd[1], 1)(recall that standard output’s file descriptor number is1). It’s important not to do this in the parent shell—the parent needs itsstdout! -

That done, we no longer need the

pfd[1]file descriptor… -

…or the

pfd[0]file descriptor. (Not all these steps are order-sensitive—we could have closedpfd[0]first.) -

Now the child’s environment matches all requirements, it is safe to replace its program image via

execvp. We must set up the environment before callingexecvpbecause the new program,/bin/echo, can run in many environments and simply accepts its initial environment as a given. An advantage of this separation of concerns is that the shell does not rely on/bin/echo(a program it does not control) to perform thedup2/closesystem calls correctly. -

The left-hand child is on its merry way and is no longer running shell code. (It may already execute, writing its output into the pipe buffer!) We switch our attention back to the parent shell, which has been continuing in the meantime.

The pipe’s write end is no longer needed once the child on the left-hand side of that pipe is forked. Once a file descriptor is no longer needed by the parent shell or any of its future children, it’s safe for the parent to close it with

close(pfd[1]). (You could close that file descriptor later, too, but that might affect future child processes: remember that all redundant file descriptors to pipe ends require closing.) -

The parent can now create the right-hand child. In your code, this will typically happen with another call to

command::run. You’ll need to figure out how to pass the read end of the pipe to that call. -

The right-hand child now proceeds to reconfigure its environment, first with

dup2, … -

…and then

close. -

The child’s environment matches our goal, so the child calls

execvpto replace its program image with the new program. -

Meanwhile, the parent shell closes its now-redundant read end of the pipe with

close. -

We have arrived at our goal: a two-process pipeline. (Can you work out how to set up a three-process pipeline on your own?)

Pipe philosophy

-

The pipe abstraction, and especially its instantiation in shells, is a fundamental innovation in the way programs could be combined

-

Pipes are a key enabler of the “Unix philosophy” of minimalist, modular software

-

The idea of a pipe predates Unix!

“Expect the output of every program to become the input to another, as yet unknown, program. Don't clutter output with extraneous information. Avoid stringently columnar or binary input formats. Don't insist on interactive input.” … “This is the Unix philosophy: Write programs that do one thing and do it well. Write programs to work together. Write programs to handle text streams, because that is a universal interface.”

Literate programming

- Literate programming: a program is developed alongside a series of small essays that describe the program’s operation

- Modern “notebook” programming, like iPython or Observable, derive from literate programming in that they integrate programs with documentation

- Designed for monoliths, not components: the program is the documentation

- A reusable component has a separate interface

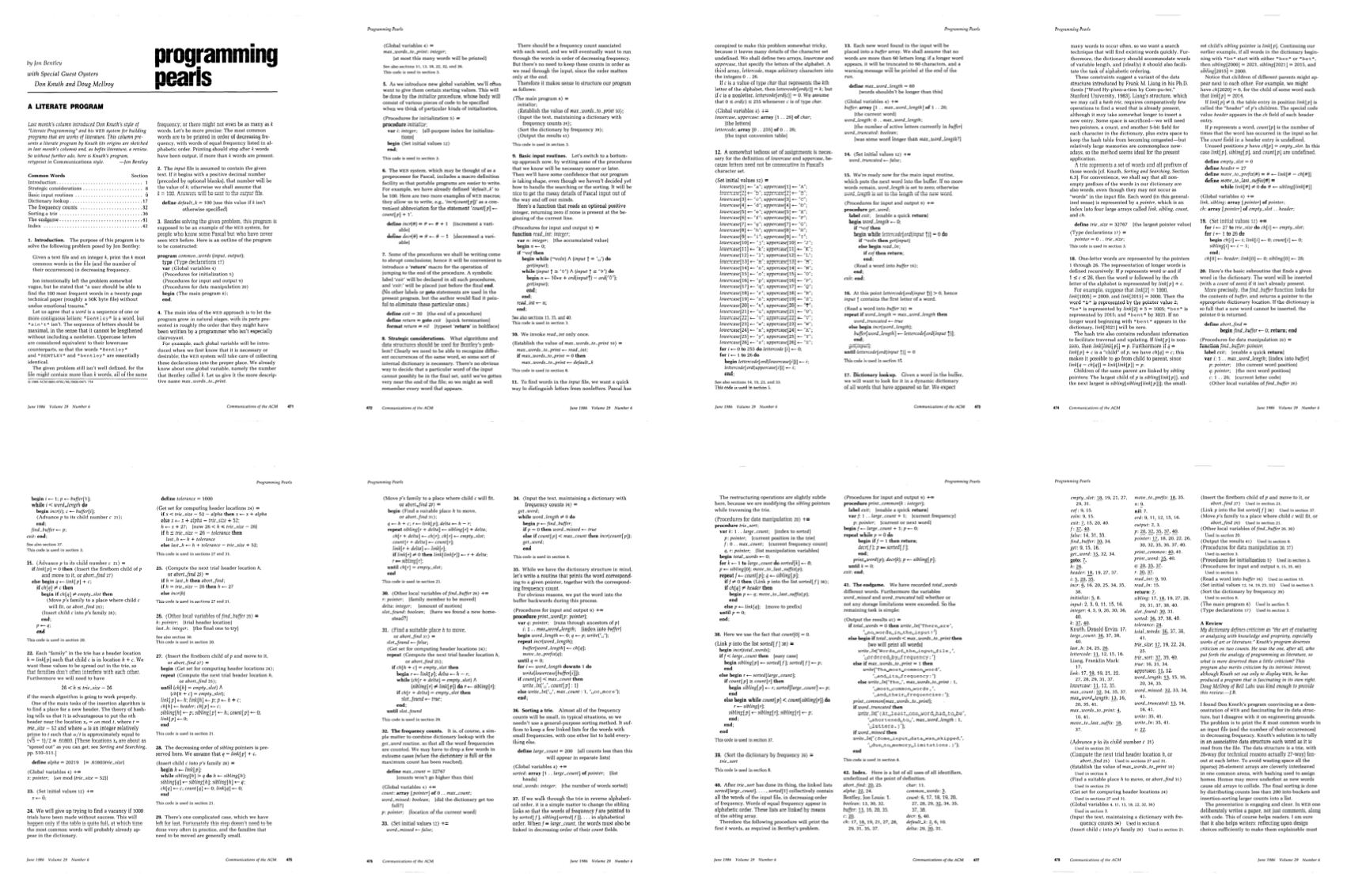

The literate programming challenge (1986)

“Given a text file and an integer k, print the k most common words in the file (and the number of their occurrences) in decreasing frequency.”

Jon Bentley; source

Donald Knuth’s solution

Doug McIlroy’s critique

tr -cs A-Za-z '\n' |

tr A-Z a-z |

sort |

uniq -c |

sort -rn |

head -n $1

“The … shell script was written on the spot and worked on the first try. … Knuth has shown us here how to program intelligibly, but not wisely. I buy the discipline. I do not buy the result. He has fashioned a sort of industrial-strength Faberge egg—intricate, wonderfully worked, refined beyond all ordinary desires, a museum piece from the start.”